This article is just me playing about with math, and showing how to extrapolate in ways that might not be immediately obvious, coming to the obvious conclusion that no, AI can’t memorize chess

Summary

The Licess chess database has 5 billion chess games recorded. There is no evidence of us even scratching the surface of unique chess games. Games are really unique. Even if an AI memorized all 5 billion games, it would help for approximately 4 moves, and then the game would be unique

Background

In 2019, the lichess chess database had 500 million games recorded.

In those games, as of 2019, there were 21.5 billion unique positions, and 76% of all positions were unique. So a game had an average of 32 unique positions. A chess game has only 34-35 moves on average, so any given game is going to start being unique in only a few moves!

But as of writing in Nov 2023, the lichess chess database now has almost exactly ten times more games. Can we extrapolate how many unique positions it has now?

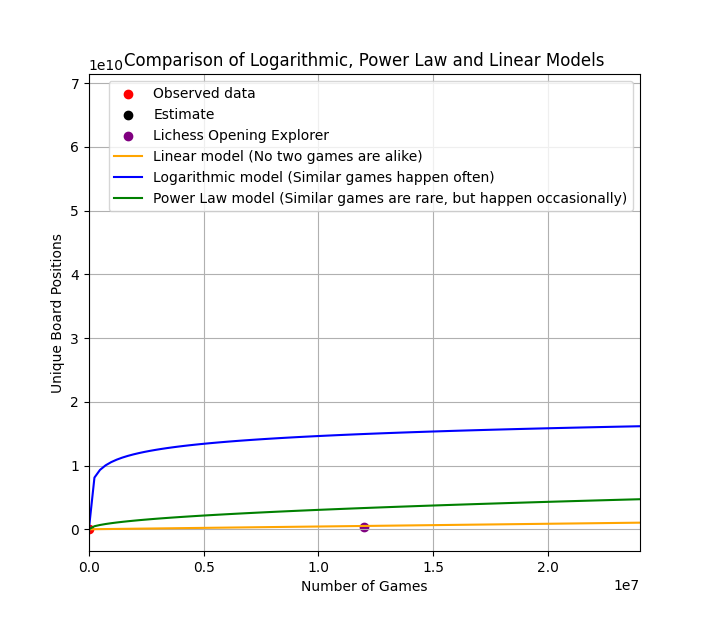

Power Law Approximation (Similar games are rare, but happen occasionally)

It’s not something we can answer without more data, but a reasonable starting point with the assumption that similar games are rare but happen occasionally, is a power law of approximately 0.5. Since we have 10 times more chess games, we can expect approximately sqrt(10) more unique positions, so ~68 million unique board positions in 2014 in 5 billion games.

Logarithmic Approximation (Similar games happen often)

As a conservative lower limit for this curve, we could instead fit logarithmic decay curve, instead of power law, to this using two data points:

- 1 game corresponds to 35 unique positions.

- 500 million games correspond to 21.5 billion unique positions.

So we want to solve:

35 = a log(b + 1)

21,500,000,000 = a log(b * 500000000 + 1)

But Wolfram Alpha timed out trying to solve it.

In python, solve and nsolve failed to solve it, but least_squares cracked it:

from scipy.optimize import least_squares

import numpy as np

# Define the function that calculates the difference between the observed and predicted values

def equations(p):

a, b = p

eq1 = a * np.log(b * 1 + 1) - 35 # Difference for the first data point

eq2 = a * np.log(b * 500_000_000 + 1) - 21_500_000_000 # Difference for the second data point

return (eq1, eq2)

bounds = ([1, 0], [np.inf, 1])

# Initial guesses for a and b

initial_guess = [10000, 1e-8]

# Perform the least squares optimization

result = least_squares(equations, initial_guess, bounds=bounds)

a_opt, b_opt = result.x

cost = result.cost

# Check the final residuals

residuals = equations(result.x)

print(f"Optimized a: {a_opt}")

print(f"Optimized b: {b_opt}")

print(f"Residuals: {residuals}")

print(f"Cost: {cost}")

Gives me: 1757372725 log(0.000411370x + 1)

Which gives ~25 billion unique board positions in 2023 for the conservative lower bound. (See summary for more)

New Data Point found! Lichess Opening Explorer

Lichess released an opening explorer – 12 million chess games, with 390 million unique positions. This gives us a new data point to test which model fits. This is what we have so far:

Let’s zoom in and see which model the lichess opening explorer fits:

The linear model! This means that the database of 5 billion games does not even scratch the surface of the 10^44 possible board positions in any way that we can detect…. although written like that it’s kinda obvious tbh.

Summary and interpretation

In the 2019 Lichess chess database, there were 500 million games, and 75% of game positions were unique,

In the 2023 Lichess chess database, there are 10 times as many games, and so extrapolating:

There are ~68 billion unique board positions using a power scaling law of 0.5. This is an empirical guess which models what we frequently see in nature. With 5000 million games and 35 moves per game, this means that only 0.4% of board positions are unique, and only 1.4% of games have a board position that hasn’t been played before.

There are ~25 billion unique board positions using a conservative lower bound estimate using logarithmic scaling). With 5000 million games and 35 moves per game, this means that only 0.14% of board positions are unique, and only 0.5% of games have a board position that hasn’t been played before.

If a board position can be stored in an average of 8 bytes (64 bits) then this could be stored in 1.4 GB. So easily fit in an over-fitted trained AI. But there are 10^44 possible board combinations. There is no evidence that we are even scratching the surface of being able to memorize

Conclusion: AI could memorize the entire 5 billion chess games, and it wouldn’t help. Probably.. see caveat

Caveat

This whole thing was kinda of a joke, and there’s one big serious flaw with the final conclusion. If the AI plays a move from the database, that’s no longer a random sampling of a game from the database, and the chance of a similar game being played goes way up. But in a way I can’t model. Oh well.

Edit: User Wisskey pointed out that, among other mistakes that I’ve made and fixed, that:

A move in chess corresponds to moves (actually called plies instead of moves) by both sides, so 1 move = 2 chess positions, not 1 chess position.

User: Wisskey

So, I’m probably off by a factor of 2 in places, not that it’s going to matter much to the final conclusion