This is an ongoing multi-year project. I need to write this up properly, but I’ve got so much stuff that I’m going to attack this in stages.

Current Status

14 motors, controlled by a Raspberry Pi. The arm doesn’t quite work yet. Everything else works.

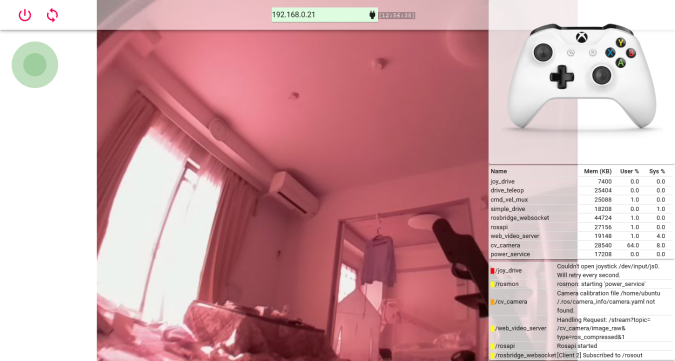

Front end – I wrote this in ReactJS, and it communicates with the robot over a websocket to ROS (Robot Operating System), using ‘rosbridge’. Sorry the picture is so pink – the rover uses infrared LEDs to work at night, but they are kinda overpowering. Also sorry for the mess – I have two children…
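
To give a feel for what goes over that websocket, here is a minimal sketch of the JSON messages that the rosbridge protocol expects for advertising a topic and publishing to it. This is not the front end's actual code (that is ReactJS). The topic name `/cmd_vel` and the helper names `advertise` and `publish_twist` are assumptions for illustration only.

```python
import json


def advertise(topic: str, msg_type: str) -> str:
    """Build a rosbridge 'advertise' message announcing a topic and its type."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_twist(topic: str, linear_x: float, angular_z: float) -> str:
    """Build a rosbridge 'publish' message carrying a geometry_msgs/Twist."""
    msg = {
        "linear": {"x": linear_x, "y": 0.0, "z": 0.0},
        "angular": {"x": 0.0, "y": 0.0, "z": angular_z},
    }
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


if __name__ == "__main__":
    # "/cmd_vel" is an assumed topic name, not necessarily what the rover uses.
    print(advertise("/cmd_vel", "geometry_msgs/Twist"))
    print(publish_twist("/cmd_vel", 0.2, 0.0))
```

Each string would be sent as one text frame on the websocket. The sketch only builds the messages, so it runs without a robot or a network connection.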

In the top left, the green circle is a ‘nipple’ (an on-screen joystick) that lets you move the rover about from the webpage, using either the mouse or a finger press.
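
As a rough sketch of how an on-screen joystick position could be turned into drive commands: the function below maps a normalised stick position to a forward speed and a turn rate. The function name, the speed limits and the deadzone value are all assumptions, not values taken from the rover.

```python
def joystick_to_velocity(x, y, max_linear=0.5, max_angular=1.5, deadzone=0.1):
    """Map a joystick position to (linear m/s, angular rad/s).

    x and y are expected in [-1, 1], with y pointing forward and x to the right.
    The limits and deadzone are illustrative defaults, not the rover's real ones.
    """
    # Clamp the inputs so a bad reading can never exceed the speed limits.
    x = max(-1.0, min(1.0, x))
    y = max(-1.0, min(1.0, y))

    # Ignore tiny movements around the centre so the rover does not creep.
    if abs(x) < deadzone:
        x = 0.0
    if abs(y) < deadzone:
        y = 0.0

    linear = y * max_linear
    # In ROS, positive angular z is a counter-clockwise (left) turn,
    # so pushing the stick to the right gives a negative turn rate.
    angular = -x * max_angular
    return linear, angular


if __name__ == "__main__":
    print(joystick_to_velocity(0.0, 1.0))   # full forward
    print(joystick_to_velocity(1.0, 0.0))   # spin right on the spot
```

The deadzone matters more with a finger press than with a mouse, because a finger rarely lands exactly on the centre of the circle.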

In the top right, the Xbox controller image shows which buttons are being pressed on the Xbox controller that controls the robot. The controller can be connected either to the rover or to the PC / mobile phone – in the latter case the webpage relays controller commands through to the rover.

Beneath the Xbox controller is the list of running processes (“ROS nodes”), with CPU and memory information.

Below that are the error messages etc.

Console UI

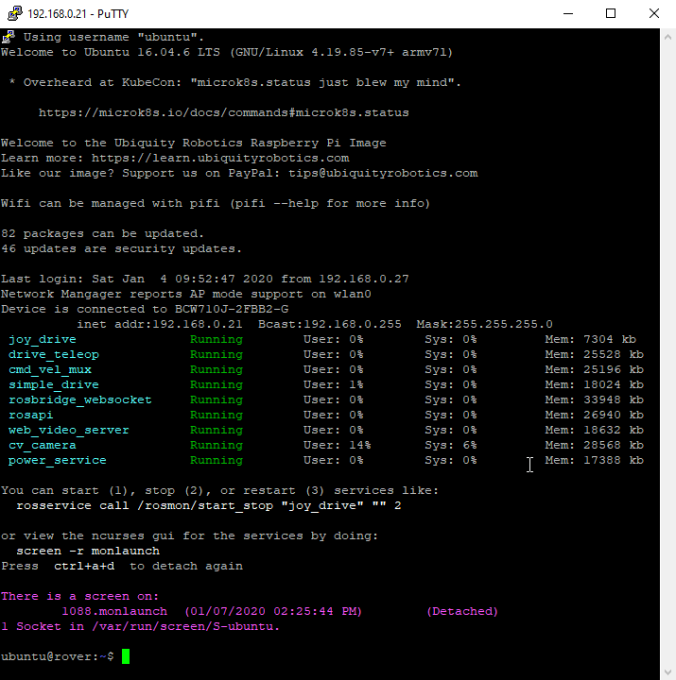

I’m a strong believer in trying to make my projects ‘transparent’ – as in, when you connect, it should be obvious what is going on, and how to do things.

With that in mind, I always create nice colored scripts that show the current status.

Below is the output when I ssh into the Raspberry Pi. It shows:

– Wifi status and IP address

– Currently running ROS node processes, with their memory and CPU usage. Colored green for good, red for stopped/crashed.

– That ‘rosmon’ is running in ‘screen’, and how to connect to it.

– The current git status, in the command prompt

I put this script in git in two parts – the first is a bash script to source from bash, and the second is a Python script that uses ROS to get the current status.

See those links for the source code. I think that every ROS project can benefit from these.
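
To illustrate the green/red idea, here is a minimal sketch of how one status line per node could be colored with ANSI escape codes. It is not the script from the repository: the function name and the example node names are made up, and the status values are passed in directly instead of being queried from ROS.

```python
GREEN = "\033[32m"
RED = "\033[31m"
RESET = "\033[0m"


def format_node_status(name, running, cpu_percent=0.0, mem_percent=0.0):
    """Return one colored status line: green if running, red if stopped."""
    color = GREEN if running else RED
    state = "running" if running else "stopped"
    return (
        f"{color}{name:<20} {state:<8} "
        f"cpu {cpu_percent:5.1f}%  mem {mem_percent:5.1f}%{RESET}"
    )


if __name__ == "__main__":
    # Hypothetical node names and numbers, just to show the output format.
    nodes = [
        ("motor_driver", True, 3.2, 1.1),
        ("camera", False, 0.0, 0.0),
    ]
    for node in nodes:
        print(format_node_status(*node))
```

Resetting the color at the end of every line keeps a crashed node from turning the rest of the terminal red.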

Rocker-Bogie Design

Ultrasonic Sensor

I played about with making a robot face. I combined an ultrasonic sensor (HC-SR04) and NeoPixel LEDs. The LEDs reflect the distance – one pixel per 10 cm.
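
The distance-to-LED mapping can be sketched roughly like this. The HC-SR04 reports distance as the length of an echo pulse, so the first function converts that time into centimetres using the speed of sound, and the second turns the distance into a number of lit pixels at one pixel per 10 cm. The function names and the strip length of 8 pixels are assumptions, and the code that reads the sensor and drives the LEDs is left out.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # approximate value in air at about 20 °C


def echo_to_distance_cm(echo_seconds):
    """Convert an echo pulse duration into a one-way distance in cm.

    The sound travels to the obstacle and back, hence the division by two.
    """
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def distance_to_pixel_count(distance_cm, num_pixels=8, cm_per_pixel=10.0):
    """Return how many pixels to light: one per 10 cm, capped at the strip length.

    num_pixels=8 is an assumed strip length, not the real face's pixel count.
    """
    if distance_cm <= 0:
        return 0
    return min(num_pixels, int(distance_cm // cm_per_pixel))


if __name__ == "__main__":
    distance = echo_to_distance_cm(0.002)  # a 2 ms echo pulse
    print(distance, distance_to_pixel_count(distance))
```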

I’m wondering if I can do SLAM – Simultaneous Localization and Mapping – with a single (or a few) very cheap ultrasonic sensors. Well, the answer is almost certainly no, but I’m very curious to see how far I can get.

NOTE: This is an old design – from more than two years ago